This post is inspired by "I want a good parallel computer". It is important to understand why the PS3 failed. The perspective here is that of a junior-ish dev working on simulation and rendering in triple-A. I remember less than I knew and knew less than most!

However, what I can provide here is the hindsight of someone who actually developed and shipped titles [1] on the PS3.

I wanted the PS3 to succeed. To be more specific, I wanted Many-Core to succeed.

The PS3 failed developers because it was an excessively heterogeneous computer, and low-level heterogeneous compute resists composability. [2]

More like Multicore than Many

The primary characteristic of Many-Core is, by virtue of the name, the high core count. Many-Core is simply a tradeoff that enables wide parallelism through more explicit (programmer) control.

| CPU | GPU | Many-Core |
|---|---|---|
| Few Complex Cores | Wide SIMD | Many Simpler Cores |
| Low latency, OOO, SuperScalar | Vector instruction pipe, High latency | RISC-like, scalar |
| Cached and Coherency Protocol | Fences, flushes, incoherence | Message passing, local storage, DMA |
| Explicit coarse synchronization | Implicit scheduling | Explicit fine synchronization |

At first glance, the SPEs of the PS3 fit the bill. They seem to have all the characteristics of Many-Core. The problem is that the most important characteristic, that there are many cores, is significantly lacking.

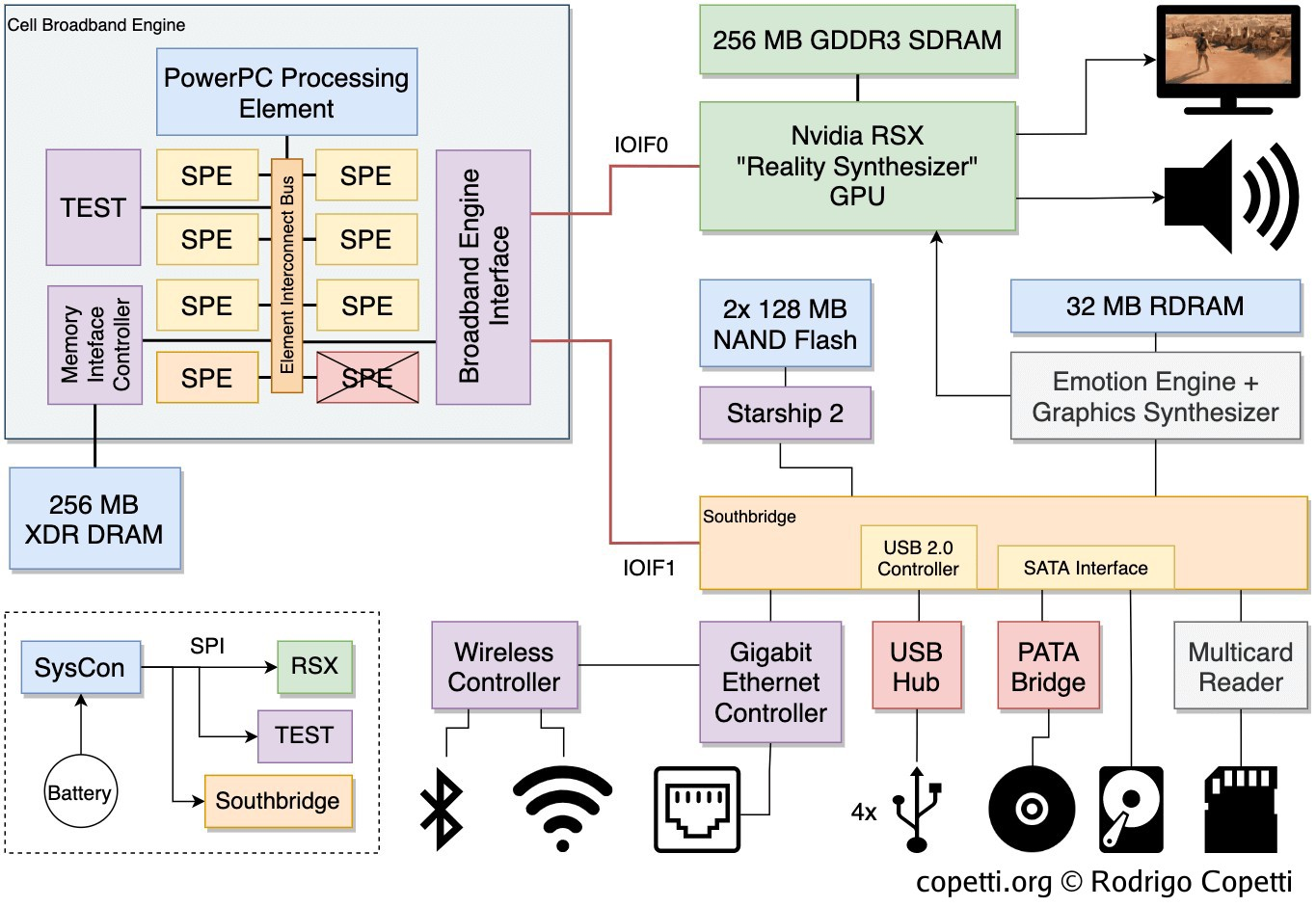

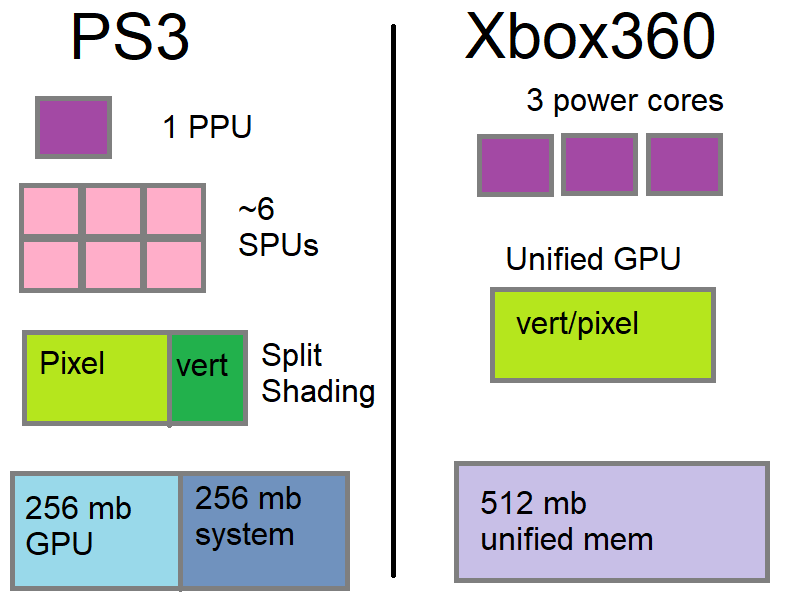

First off, you didn’t get the full 8 SPEs as a (game) developer. Out of the 8 SPEs, one was disabled to improve die yield and the OS got a core and a half. While this changed with updates, one only really got 5-6 SPEs to work with. The Xbox 360, in contrast, had what amounted to 3 PPEs (2 more than the PS3's single PPE). So the Cell really featured at most 3 more (difficult to use) cores than the Xbox 360.

Computationally Weak Components

The claim from Wikipedia is that a single SPE has 25 GFlops and the PS3 GPU has 192 GFlops. If you absolutely maxed out your SPE usage, you would still not even be close to the power of the underpowered PS3 GPU. For contrast, the Xbox 360 GPU had 240 GFlops. The GPU of the PS3 had separate vertex and pixel shading units. In contrast, the Xbox 360 used shared computational resources, so it could load balance between heavy vertex shading and heavy pixel shading. (Examples here would be character skinning vs UI rendering.)
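To make the comparison concrete, here is a small back-of-the-envelope calculation using only the peak figures quoted above and the 5-6 usable SPEs mentioned earlier. Peak numbers say nothing about achievable throughput, so treat this as a rough sketch; the function name is just illustrative.

```python
# Peak figures quoted above (GFLOPS), as cited from Wikipedia.
SPE_GFLOPS = 25
PS3_GPU_GFLOPS = 192
XBOX360_GPU_GFLOPS = 240


def spe_total_gflops(usable_spes: int) -> int:
    """Aggregate peak throughput if every usable SPE ran flat out."""
    return usable_spes * SPE_GFLOPS


for usable in (5, 6):
    total = spe_total_gflops(usable)
    print(
        f"{usable} SPEs: {total} GFLOPS peak, "
        f"{total / PS3_GPU_GFLOPS:.2f}x the PS3 GPU, "
        f"{total / XBOX360_GPU_GFLOPS:.2f}x the Xbox 360 GPU"
    )
```

Even in the best case of 6 fully saturated SPEs, the aggregate peak stays below either GPU.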

As a game developer, I found that these Flops numbers reflected the experience of developing on the respective platforms. This was particularly noticeable in something like post-processing, where the demands on the vertex unit are very low (large quads).

Due to the weakness of the GPU vertex unit, developers would use the SPEs to do skinning.

The pixel shading unit did not have constants, so one would also have to do shader patching on the SPEs before these programs could be sent to the GPU. All of these things require synchronization between the CPU, SPE and GPU and interact with workload balancing. In retrospect I also assume that dynamic branching in the shader was either impossible or prohibitive, which is why everyone did excessive shader permutations. This means tens of megabytes of shaders. Again, contrast this with the Xbox 360, which supported wave operations [3]; I even used this feature back in the day. Because each component of the PS3 is weak on its own, they all must be employed in concert to compete with (the) less heterogeneous platforms.
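A quick sketch of why permutations balloon: without cheap dynamic branching, every on/off feature becomes a compile-time switch, so the number of precompiled variants doubles with each feature. The feature names and the 8 KiB per-variant size below are purely hypothetical, chosen only to show the growth; they are not measurements from any real engine.

```python
from itertools import product

# Hypothetical feature toggles. With cheap dynamic branching each would be an
# `if` inside one shader; without it, each becomes a compile-time switch.
FEATURES = ["skinning", "normal_map", "specular", "shadows", "fog", "alpha_test"]


def enumerate_permutations(features):
    """Yield one dict of on/off settings per shader variant to be compiled."""
    for bits in product((False, True), repeat=len(features)):
        yield dict(zip(features, bits))


def total_size_mib(num_features, bytes_per_variant):
    """Storage needed if every on/off combination is precompiled."""
    return (2 ** num_features) * bytes_per_variant / (1024 * 1024)


variants = list(enumerate_permutations(FEATURES))
print(len(variants), "variants for", len(FEATURES), "features")

# Assumed size of one compiled variant (hypothetical).
print(total_size_mib(12, 8 * 1024), "MiB for 12 features at 8 KiB each")
```

Under these assumed numbers, a dozen independent toggles already lands in the tens of megabytes.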

Computer Not Super

While the Cell could behave more like a supercomputer, I saw it mostly used more like generic GPU compute. I never saw production code that did anything but dispatch N jobs from the PPE. I never saw direct inter-SPE communication, even though I recall such a thing was possible (mailboxes). This is similar to how GPU inter-workgroup workloads are rarer and more difficult.
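The "dispatch N jobs" pattern can be modeled in a few lines. This is only a sketch of the shape of the code, not of any real SPE job system: a thread pool stands in for the SPEs, and `run_job`, `dispatch` and `split_range` are made-up names. The key property is that each job is self-contained and workers never talk to each other.

```python
from concurrent.futures import ThreadPoolExecutor

NUM_WORKERS = 6  # stand-in for the usable SPEs


def run_job(job):
    """One self-contained job: reads only its own input, returns its own output."""
    start, end = job
    return sum(i * i for i in range(start, end))


def split_range(total, num_jobs):
    """Cut [0, total) into num_jobs contiguous, non-overlapping ranges."""
    step = total // num_jobs
    return [
        (i * step, total if i == num_jobs - 1 else (i + 1) * step)
        for i in range(num_jobs)
    ]


def dispatch(jobs, num_workers=NUM_WORKERS):
    """The 'PPE' side: hand out N independent jobs, then wait for every result."""
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        return list(pool.map(run_job, jobs))


partials = dispatch(split_range(1_000, 8))
print(sum(partials) == sum(i * i for i in range(1_000)))
```

All coordination lives in the dispatcher, which is exactly why this style resembles GPU compute more than a supercomputer.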

The heterogeneous nature was everywhere. Even the PPE was quite different from an SPE. The SPEs had only vector registers; the PPE had fp, gp, and vector registers. Is this really bad? No [4], but it makes everything more heterogeneous and therefore more complex. Getting maximum performance out of these SPE units meant that you were likely doing async DMAs while also doing compute work. These nuances could be a fun challenge for a top programmer but ended up being more of an obstacle to development for game studios.
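Overlapping async DMA with compute is usually done with double buffering: start fetching chunk N+1, then work on chunk N while the transfer is in flight. The sketch below models only the control flow. A single-worker thread pool plays the DMA engine, and `dma_get` and `process` are hypothetical stand-ins rather than real SPE intrinsics.

```python
from concurrent.futures import ThreadPoolExecutor


def dma_get(main_memory, offset, size):
    """Stand-in for an asynchronous DMA: copy one chunk into a local buffer."""
    return list(main_memory[offset:offset + size])


def process(chunk):
    """Stand-in for the compute kernel run on data already in local store."""
    return sum(x * 2 for x in chunk)


def stream_double_buffered(main_memory, chunk_size):
    """Compute on the current chunk while the next one is being fetched."""
    total = 0
    with ThreadPoolExecutor(max_workers=1) as dma:  # the "DMA engine"
        pending = None  # transfer for the chunk we will process next
        for offset in range(0, len(main_memory), chunk_size):
            # Kick off the next transfer before touching the current chunk.
            fetch = dma.submit(dma_get, main_memory, offset, chunk_size)
            if pending is not None:
                total += process(pending.result())
            pending = fetch
        if pending is not None:  # drain the last chunk
            total += process(pending.result())
    return total


data = list(range(10))
print(stream_double_buffered(data, chunk_size=4))
```

At most two chunks are alive at once, which is the point: the working set has to fit in local store alongside code and stack.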

Sharp Edges

The PS3 had 512 MB of total memory, but 256 MB was dedicated to graphics and only had REDACTED MB/s access from the CPU. This means that in addition to the 256 MB purely for graphics, you would also have to dedicate system memory for anything that was written to and from the GPU. The point here is the inflexibility and heterogeneous nature.

The PS3 had cool features, but these were never general purpose and could only be exploited by careful attention to detail and sometimes significant engine changes. I recall using depth bounds for screen space shadowing, and one could probably use it for a few other similar GPU techniques (lighting). There was also the alluring double z writes, which is a one-off for depth maps if you don’t actually use a pixel shader. I don’t recall all the edges, but they were sharp and meant performance cliffs if one strayed from the intended usage. The next section covers the sharpest edge of them all.

The Challenge of Local memory

Of course, the challenge that everyone knows about the SPEs is that memory access is constrained to local memory. You got 256 KB, but in reality once you factored in stack and program you were probably down to 128 KB. This computational model is far more restrictive than even modern GPU compute, where at least you can access storage buffers directly.
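To get a feel for how tight this is, here is a rough budget calculation. It assumes the approximate 128 KB usable figure above, that every data stream is double-buffered so transfers can overlap with compute, and a 128-byte buffer alignment. The alignment value and the helper name are assumptions for illustration.

```python
LOCAL_STORE_BYTES = 256 * 1024
USABLE_BYTES = 128 * 1024  # rough figure from the text, after program and stack


def buffer_bytes(usable_bytes, num_streams, buffers_per_stream=2, alignment=128):
    """Largest per-buffer size when each stream is double-buffered.

    The result is rounded down to a multiple of `alignment` (assumed value).
    """
    raw = usable_bytes // (num_streams * buffers_per_stream)
    return raw - (raw % alignment)


# One input stream plus one output stream, each double-buffered: four buffers.
size = buffer_bytes(USABLE_BYTES, num_streams=2)
print(size, "bytes per buffer,", size // 16, "16-byte vectors each")
```

Under these assumptions a simple in/out kernel works on 32 KB at a time, and every extra stream shrinks that further.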

Most code and algorithms cannot be trivially ported to the SPE.

C++ virtual functions and methods will not work out of the box. C++ encourages dynamic allocation of objects, but these can point to anywhere in main memory. You would need to map pointer addresses from PPE to SPE to even attempt running a normal C++ program on the SPE. Also, null (address 0x0) points to the start of local memory, and loading from it does not segfault.
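The pointer-mapping problem can be illustrated with a toy model. Suppose a block of main memory has been copied into local store; any pointer into that block must be rebased before use, and any pointer outside it is simply not resident. The `LocalWindow` class and the addresses below are hypothetical, a sketch of the idea rather than how any particular engine did it.

```python
class LocalWindow:
    """A block of main memory that has been copied into local store."""

    def __init__(self, main_base, size, local_base):
        self.main_base = main_base    # address of the block in main memory
        self.size = size              # number of bytes copied
        self.local_base = local_base  # where the copy starts in local store

    def to_local(self, main_address):
        """Rebase a main-memory address to its local-store equivalent."""
        if not (self.main_base <= main_address < self.main_base + self.size):
            raise LookupError("address is not resident in local store")
        return self.local_base + (main_address - self.main_base)


window = LocalWindow(main_base=0x1000_0000, size=0x4000, local_base=0x8000)
print(hex(window.to_local(0x1000_0010)))
```

Every pointer dereference in "normal" C++ code would need a check like this, which is why generic code did not port trivially.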

So, instead of running generic code on the SPE, what developers did was write handcrafted, SPE-friendly code for heavy but parallelizable parts of their engine. With enough talent and investment you can eke out the full compute power of the PS3. [5] Of course, this is maybe easier as a first-party developer, as you can at least focus on this exotic hardware and craft your engine and game features around the type of compute available. This is why Naughty Dog famously came so close to showing us the full potential of the console.

Uncharted 3: Mobygames image

What could have been

Had the PS3 been what was originally designed, it would have been a much more exotic but much less heterogeneous machine.

The original design was approximately 4 Cell processors with high frequencies. Perhaps massaging this design would have led to a very homogeneous, high-performance Many-Core architecture. At more than 1 TFlop of general purpose compute it would have been a beast, and not a gnarly beast but a sleek, smooth, uniform tiger.

One has to actually look at the PS3 as the licked cookie [6] of Many-Core designs. This half-baked, half-hearted attempt became synonymous with the failure of Many-Core. I used to think that the PS3 set back Many-Core for decades; now I wonder if it simply killed it forever.

Refs

[1] Some of the titles I have worked on.

[2] I will not provide a proof or reference, but the mathematical issue is that the space is not covered uniformly. This low-level composability problem is often seen in instruction selection when writing assembly.

[3] The Xbox 360 GPU had wave operations like ifany/ifall that are similar to modern control flow subgroup operations.

[4] The fact that the SPEs had only vector registers was apparent to the programmer because loads/stores also had to be vector aligned.

[5] PS3 SPE usage: it is clear that some games had higher utilization than others.

[6] I am not sure my usage fits Raymond’s narrow specifications.

classic.copetti.org: Source for images and some technical specifications.