One of the most important classes goes by the humble name “P.” Roughly speaking, it encompasses all problems that can be solved in a reasonable amount of time. An analogous complexity class for space is dubbed “PSPACE.”

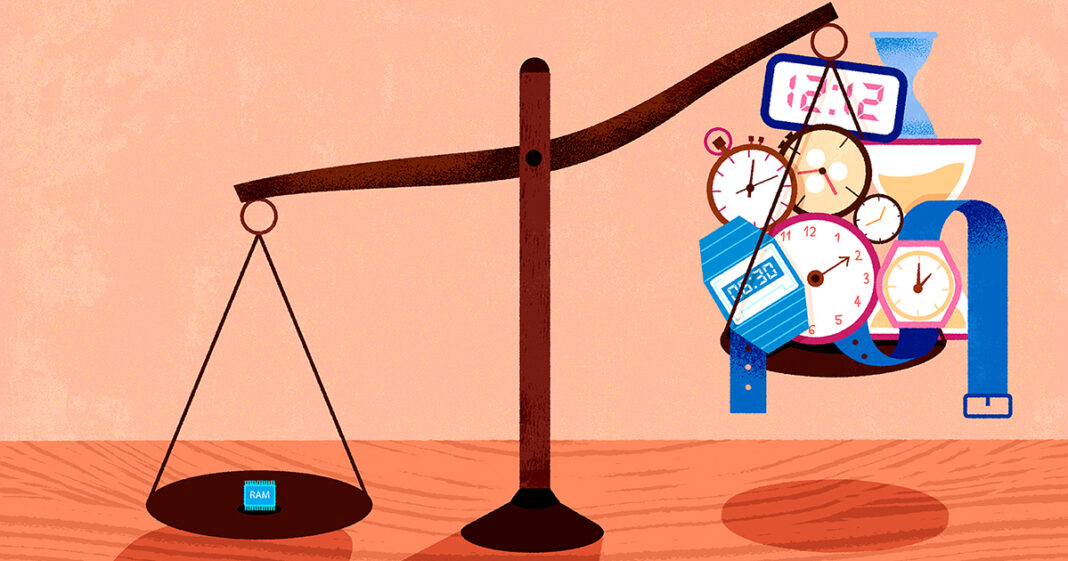

The relationship between these two classes is one of the central questions of complexity theory. Every problem in P is also in PSPACE, because fast algorithms just don’t have enough time to fill up much space in a computer’s memory. If the reverse statement were also true, the two classes would be equivalent: Space and time would have comparable computational power. But complexity theorists suspect that PSPACE is a much larger class, containing many problems that aren’t in P. In other words, they believe that space is a far more powerful computational resource than time. This belief stems from the fact that algorithms can use the same small chunk of memory over and over, while time isn’t as forgiving — once it passes, you can’t get it back.

“The intuition is just so simple,” Williams said. “You can reuse space, but you can’t reuse time.”

But intuition isn’t good enough for complexity theorists: They want rigorous proof. To prove that PSPACE is larger than P, researchers would have to show that for some problems in PSPACE, fast algorithms are categorically impossible. Where would they even start?

A Space Odyssey

As it happened, they started at Cornell University, where Hartmanis moved in 1965 to head the newly established computer science department. Under his leadership it quickly became a center of research in complexity theory, and in the early 1970s, a pair of researchers there, John Hopcroft and Wolfgang Paul, set out to establish a precise link between time and space.

Hopcroft and Paul knew that to resolve the P versus PSPACE problem, they’d have to prove that you can’t do certain computations in a limited amount of time. But it’s hard to prove a negative. Instead, they decided to flip the problem on its head and explore what you can do with limited space. They hoped to prove that algorithms given a certain space budget can solve all the same problems as algorithms with a slightly larger time budget. That would indicate that space is at least slightly more powerful than time — a small but necessary step toward showing that PSPACE is larger than P.

With that goal in mind, they turned to a method that complexity theorists call simulation, which involves transforming existing algorithms into new ones that solve the same problems, but with different amounts of space and time. To understand the basic idea, imagine you’re given a fast algorithm for alphabetizing your bookshelf, but it requires you to lay out your books in dozens of small piles. You might prefer an approach that takes up less space in your apartment, even if it takes longer. A simulation is a mathematical procedure you could use to get a more suitable algorithm: Feed it the original, and it’ll give you a new algorithm that saves space at the expense of time.

Algorithm designers have long studied these space-time trade-offs for specific tasks like sorting. But to establish a general relationship between time and space, Hopcroft and Paul needed something more comprehensive: a space-saving simulation procedure that works for every algorithm, no matter what problem it solves. They expected this generality to come at a cost. A universal simulation can’t exploit the details of any specific problem, so it probably won’t save as much space as a specialized simulation. But when Hopcroft and Paul started their work, there were no known universal methods for saving space at all. Even saving a small amount would be progress.

The breakthrough came in 1975, after Hopcroft and Paul teamed up with a young researcher named Leslie Valiant. The trio devised a universal simulation procedure that always saves a bit of space. No matter what algorithm you give it, you’ll get an equivalent one whose space budget is slightly smaller than the original algorithm’s time budget.

“Anything you can do in so much time, you can also do in slightly less space,” Valiant said. It was the first major step in the quest to connect space and time.

In 1975, Leslie Valiant helped prove that space is a slightly more powerful computational resource than time.

Katherine Taylor for Quanta Magazine

But then progress stalled, and complexity theorists began to suspect that they’d hit a fundamental barrier. The problem was precisely the universal character of Hopcroft, Paul and Valiant’s simulation. While many problems can be solved with much less space than time, some intuitively seemed like they’d need nearly as much space as time. If so, more space-efficient universal simulations would be impossible. Paul and two other researchers soon proved that they are indeed impossible, provided you make one seemingly obvious assumption: Different chunks of data can’t occupy the same space in memory at the same time.

“Everybody took it for granted that you cannot do better,” Wigderson said.

Paul’s result suggested that resolving the P versus PSPACE problem would require abandoning simulation altogether in favor of a different approach, but nobody had any good ideas. That was where the problem stood for 50 years — until Williams finally broke the logjam.

First, he had to get through college.

Complexity Classes

In 1996, the time came for Williams to apply to colleges. He knew that pursuing complexity theory would take him far from home, but his parents made it clear that the West Coast and Canada were out of the question. Among his remaining options, Cornell stood out to him for its prominent place in the history of his favorite discipline.

“This guy Hartmanis defined the time and space complexity classes,” he recalled thinking. “That was important for me.”

Williams was admitted to Cornell with generous financial aid and arrived in the fall of 1997, planning to do whatever it took to become a complexity theorist himself. His single-mindedness stuck out to his fellow students.

“He was just super-duper into complexity theory,” said Scott Aaronson, a computer scientist at the University of Texas, Austin, who overlapped with Williams at Cornell.